Retrieval-augmented generation (RAG), a technique that enhances the efficiency of large language models (LLMs) in handling extensive amounts of text, is critical in natural language processing, particularly in applications such as question-answering, where maintaining the context of information is crucial for generating accurate responses. As language models evolve, researchers strive to push the boundaries by improving how these models process and retrieve relevant information from large-scale textual data.

One main problem with existing LLMs is their difficulty in managing long contexts. As the context length increases, the models need help to maintain a clear focus on relevant information, which can lead to a significant drop in the quality of their answers. This issue is particularly pronounced in question-answering tasks, where precision is paramount. The models tend to get overwhelmed by the sheer volume of information, which can cause them to retrieve irrelevant data, diluting the answers’ accuracy.

In recent developments, LLMs like GPT-4 and Gemini have been designed to handle much longer text sequences, with some models supporting up to 1 million tokens in context. However, these advancements come with their own set of challenges. While long-context LLMs can theoretically handle larger inputs, they often introduce unnecessary or irrelevant chunks of information into the process, resulting in a lower precision rate. Thus, researchers are still seeking better solutions to effectively manage long contexts while maintaining answer quality and efficiently using computational resources.

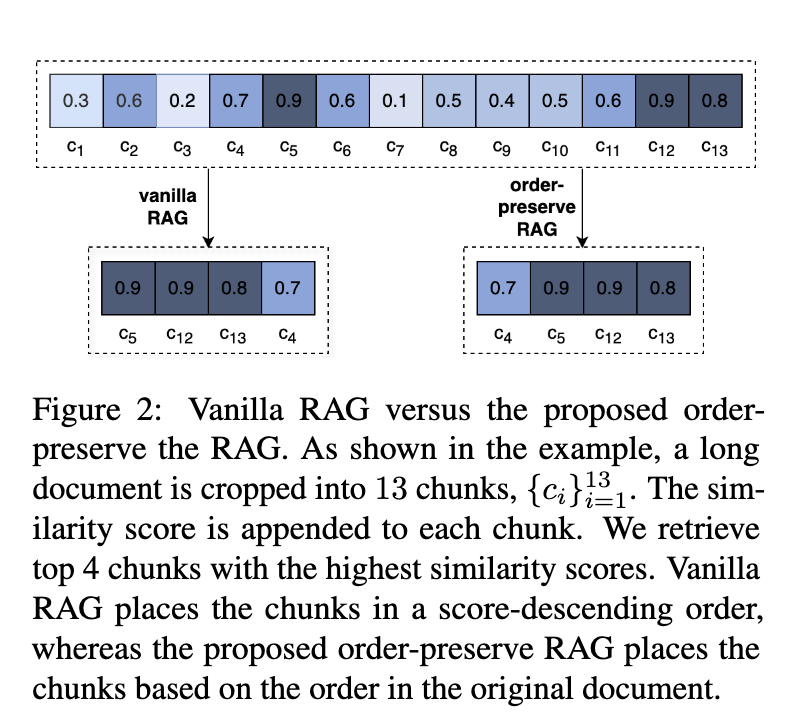

Researchers from NVIDIA, based in Santa Clara, California, proposed an order-preserve retrieval-augmented generation (OP-RAG) approach to address these challenges. OP-RAG offers a substantial improvement over the traditional RAG methods by preserving the order of the text chunks retrieved for processing. Unlike existing RAG systems, which prioritize chunks based on relevance scores, the OP-RAG mechanism retains the original sequence of the text, ensuring that context and coherence are maintained throughout the retrieval process. This advancement allows for a more structured retrieval of relevant information, avoiding the pitfalls of traditional RAG systems that might retrieve highly relevant but out-of-context data.

The OP-RAG method introduces an innovative mechanism that restructures how information is processed. First, the large-scale text is split into smaller, sequential chunks. These chunks are then evaluated based on their relevance to the query. Instead of ranking them solely by relevance, OP-RAG ensures that the chunks are kept in their original order as they appeared in the source document. This sequential preservation helps the model focus on retrieving the most contextually relevant data without introducing irrelevant distractions. The researchers demonstrated that this approach significantly enhances answer generation quality, particularly in long-context scenarios, where maintaining coherence is essential.

The performance of the OP-RAG method was thoroughly tested against other leading models. The researchers from NVIDIA conducted experiments using public datasets, such as the EN.QA and EN.MC benchmarks from ∞Bench. Their results showed a marked improvement in both precision and efficiency compared to traditional long-context LLMs without RAG. For example, in the EN.QA dataset, which contains an average of 150,374 words per context, OP-RAG achieved a peak F1 score of 47.25 when using 48K tokens as input, a significant improvement over models like GPT-4O. Similarly, on the EN.MC dataset, OP-RAG outperformed other models by a considerable margin, achieving an accuracy of 88.65 with only 24K tokens, whereas the traditional Llama3.1 model without RAG could only attain 71.62 accuracy using 117K tokens.

Further comparisons showed that OP-RAG improved the quality of the generated answers and dramatically reduced the number of tokens needed, making the model more efficient. Traditional long-context LLMs, such as GPT-4O and Gemini-1.5-Pro, required nearly double the number of tokens compared to OP-RAG to achieve lower performance scores. This efficiency is particularly valuable in real-world applications, where computational costs and resource allocation are critical factors in deploying large-scale language models.

In conclusion, OP-RAG presents a significant breakthrough in the field of retrieval-augmented generation, offering a solution to the limitations of long-context LLMs. By preserving the order of the retrieved text chunks, the method allows for more coherent and contextually relevant answer generation, even in large-scale question-answering tasks. The researchers at NVIDIA have shown that this innovative approach outperforms existing methods in terms of quality and efficiency, making it a promising solution for future advancements in natural language processing.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and LinkedIn. Join our Telegram Channel.

If you like our work, you will love our newsletter..

Don’t Forget to join our 50k+ ML SubReddit

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.