In the AI development ecosystem, and specifically during model training, it falls to humans to detect and mitigate such concerns and to pave the way for reliable learning and model performance. Let’s break down these human responsibilities further.

Human-Enabled Strategic Approaches To Fixing AI Reliability Gaps

The Deployment Of Specialists

Identifying a model’s flaws and fixing them falls to its stakeholders. Subject matter experts (SMEs) and other specialists are critical to ensuring that intricate, domain-specific details are addressed. For instance, when training a healthcare model for medical imaging, specialists such as radiologists and CT scan technicians must be part of quality assurance, flagging errors in the model’s outputs and approving correct results.

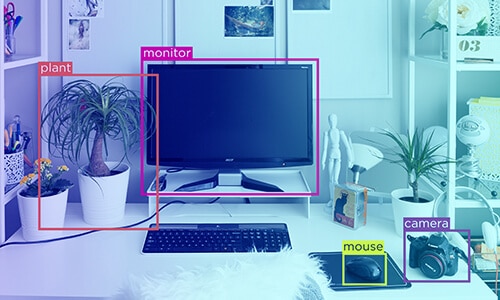

The Need For Contextual Annotation

AI model training depends on annotated data. Data annotation adds context and meaning to the data fed to a model, enabling it to understand the different elements in a dataset, whether videos, images, or text. Humans provide AI models with this context through annotation, dataset curation, and related tasks.

The XAI Mandate

AI models are analytical and, at best, partially rational, but they have no emotional or moral judgment. Abstract concepts like ethics, responsibility, and fairness rely heavily on exactly that kind of judgment. This is why human expertise during AI training is essential to identify and reduce bias and to prevent discrimination.

Model Performance Optimization

While techniques like reinforcement learning let models learn from reward signals with limited human input, most models are ultimately deployed to make people’s lives easier. In domains such as healthcare, automotive, and fintech, human involvement is crucial because outcomes are sensitive and, in some cases, a matter of life and death. The more closely humans are involved in training, the better and more ethically models tend to perform.

The Way Forward

Keeping humans involved in model training and monitoring is reassuring and rewarding. The challenge arises during implementation: enterprises often cannot find SMEs with the right specialization, or cannot staff enough reviewers to meet the volume demands of at-scale projects.

In such cases, the simplest alternative is to collaborate with a trusted AI training data provider such as Shaip. Our expert services cover not only ethical sourcing of training data but also stringent quality assurance methodologies. This enables us to deliver precise, high-quality datasets for your niche requirements.

For every project, we handpick SMEs and experts from the relevant fields and industries to ensure airtight data annotation. Our quality assurance policies are also applied uniformly across every dataset format we deliver.

To source premium-quality AI training data for your projects, we recommend getting in touch with us today.