Researchers from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) and Google Research have introduced Alchemist, a model that gives users fine-grained control over the material properties of objects in images. It targets a common frustration for users of text-to-image generative models: getting an object's material appearance detailed and accurate.

Alchemist allows users to modify four key attributes of both real and AI-generated pictures:

- Roughness

- Metallicity

- Albedo

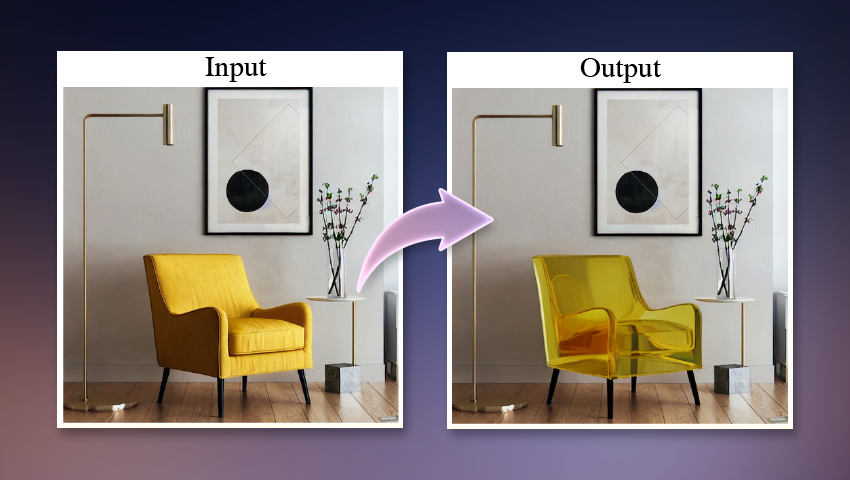

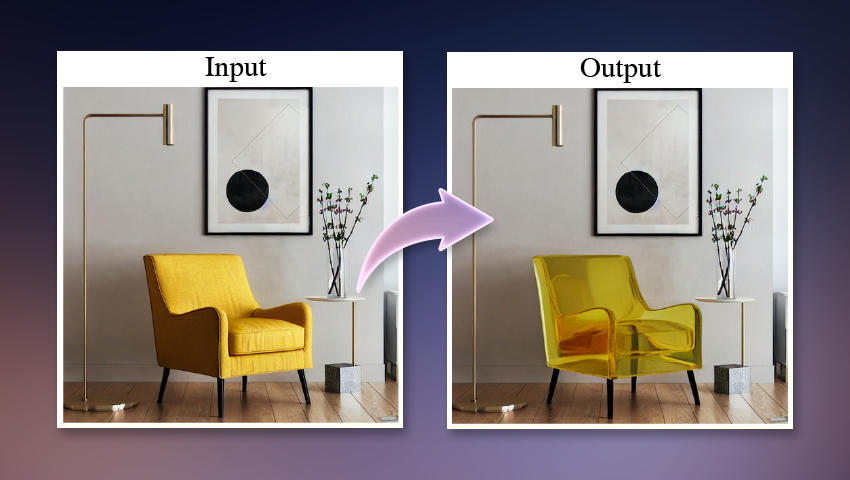

- Transparency

Alchemist takes any image as input and lets users adjust each property on a continuous scale from -1 to 1, producing a new image. It is built on a denoising diffusion model, specifically Stable Diffusion 1.5, a text-to-image model known for its photorealistic results and editing capabilities. Whereas previous diffusion-based systems focused on higher-level changes, such as swapping objects or altering image depth, Alchemist homes in on low-level material attributes. According to the researchers, this slider-based control allows more precise material adjustments than competing methods.
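To make the slider interface more concrete, here is a minimal Python sketch of how a single edit request might be represented before it reaches a model. The names `MaterialEdit` and `slider_to_strength` and the vector encoding are hypothetical illustrations, not Alchemist's actual interface. The sketch also assumes that one attribute is edited at a time and that a strength of 0 means "no change".

```python
from dataclasses import dataclass

# The four properties named in the article; the identifiers are hypothetical.
ATTRIBUTES = ("roughness", "metallicity", "albedo", "transparency")


@dataclass(frozen=True)
class MaterialEdit:
    """A single relative edit: which attribute to change and by how much."""
    attribute: str
    strength: float  # assumed meaning: -1.0 = full decrease, 0.0 = no change, 1.0 = full increase

    def __post_init__(self) -> None:
        # Reject attributes outside the four listed properties.
        if self.attribute not in ATTRIBUTES:
            raise ValueError(f"unknown attribute: {self.attribute!r}")
        # Enforce the continuous [-1, 1] scale described in the article.
        if not -1.0 <= self.strength <= 1.0:
            raise ValueError(f"strength must be in [-1, 1], got {self.strength}")

    def as_vector(self) -> list[float]:
        """Encode the edit as a 4-vector: the strength in the chosen slot, zeros elsewhere."""
        return [self.strength if name == self.attribute else 0.0 for name in ATTRIBUTES]


def slider_to_strength(position: int, steps: int = 100) -> float:
    """Map an integer UI slider position in [0, steps] linearly onto [-1, 1]."""
    if not 0 <= position <= steps:
        raise ValueError(f"position must be in [0, {steps}]")
    return 2.0 * position / steps - 1.0
```

For example, dragging a transparency slider all the way to the right gives `MaterialEdit("transparency", slider_to_strength(100))`, whose `as_vector()` is `[0.0, 0.0, 0.0, 1.0]`. Validating the range in the data type keeps out-of-range slider values from ever reaching the model.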

Alchemist’s design capabilities promise significant advancements in various fields:

- Video Game Design: Alchemist could be used to modify video game models, adapting them to different environments or enhancing their realism.

- Visual Effects (VFX): By adjusting material properties, Alchemist could expand the capabilities of AI in visual effects, making scenes more convincing and immersive.

- Robotic Training Data: Images with edited materials could expose robots to a wider range of textures, helping them better understand and manipulate diverse items in real-world scenarios. Additionally, Alchemist could help improve image classification models by revealing where neural networks fail to recognize material changes.

In comparative studies, Alchemist outperformed similar models by editing only the specified object of interest. For instance, when tasked with making a dolphin fully transparent without altering the ocean background, Alchemist was the only model to do so precisely. User studies also showed a preference for Alchemist, with many participants rating its outputs as more photorealistic than those of competing models.

Because photographing the same object with only one material property changed is impractical at scale, the researchers trained Alchemist on a synthetic dataset instead. They built it by randomly editing the material attributes of 1,200 materials applied to 100 unique 3D objects in Blender, a popular computer graphics tool.
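The random sampling step of such a synthetic pipeline can be sketched in Python. This is a hypothetical illustration, not the researchers' code: it only draws random (object, material, attribute, strength) edit specifications using the counts reported above. The Blender rendering step, which would presumably render each specification before and after the edit to form a training pair, is omitted, and all names here are assumptions.

```python
import random
from dataclasses import dataclass

# Counts taken from the article; the attribute identifiers are hypothetical.
NUM_OBJECTS = 100
NUM_MATERIALS = 1200
ATTRIBUTES = ("roughness", "metallicity", "albedo", "transparency")


@dataclass(frozen=True)
class EditSample:
    """One randomly drawn edit to be rendered (rendering not shown)."""
    object_id: int
    material_id: int
    attribute: str
    strength: float


def sample_edits(n: int, seed: int = 0) -> list[EditSample]:
    """Draw n random edit specifications reproducibly from a seeded RNG."""
    rng = random.Random(seed)  # local RNG so results do not depend on global state
    samples = []
    for _ in range(n):
        samples.append(
            EditSample(
                object_id=rng.randrange(NUM_OBJECTS),      # 0 .. 99
                material_id=rng.randrange(NUM_MATERIALS),  # 0 .. 1199
                attribute=rng.choice(ATTRIBUTES),
                strength=rng.uniform(-1.0, 1.0),           # same [-1, 1] scale as the sliders
            )
        )
    return samples
```

Seeding a local `random.Random` instance makes the dataset plan reproducible, so the same list of edits can be regenerated and re-rendered if the rendering setup changes.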

Despite these advances, Alchemist has limitations, particularly in inferring illumination accurately, which can lead to physically implausible results. For example, when a cereal box with a hand partially inside it is set to maximum transparency, the box may render as a clear container in which the fingers inside are not visible.

The research team aims to expand Alchemist's capabilities. Future work may focus on improving scene-level 3D assets for graphics and on inferring material properties from images, which could help link objects' visual appearance to their mechanical traits.
