Key measures companies are implementing to adhere to evolving AI regulations

Companies are taking concrete steps to adhere to evolving regulations and guidelines on artificial intelligence (AI). These efforts aim not only at compliance but also at building trust in AI technologies among users and regulators. Here are some of the key measures companies are implementing:

Establishing Ethical AI Principles

Many organizations are developing and publicly sharing their own sets of ethical AI principles. These principles typically reflect widely shared norms such as fairness, transparency, accountability, and respect for user privacy. By establishing these frameworks, companies set a foundation for ethical AI development and use within their operations.

Creating AI Governance Structures

To ensure adherence to both internal guidelines and external regulations, companies are setting up governance structures dedicated to AI oversight. These can include AI ethics boards, oversight committees, and specific roles such as Chief Ethics Officers who oversee the ethical deployment of AI technologies. These structures help assess AI projects for compliance and ethical considerations from the design phase through deployment.

Implementing AI Impact Assessments

Similar to Data Protection Impact Assessments (DPIAs) under GDPR, AI impact assessments are becoming common practice. These assessments help identify potential risks and ethical concerns associated with AI applications, including impacts on privacy, security, fairness, and transparency. Conducting them early and repeating them throughout the AI lifecycle enables companies to mitigate risks proactively.
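As one illustration of what a fairness check inside such an assessment might look like, the sketch below compares approval rates across groups, a measure often called the demographic parity difference. The function names, the group labels, and the sample decisions are all hypothetical, and a real assessment would combine several measures with legal and domain review rather than rely on a single number.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def selection_rates(decisions: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of positive outcomes for each group."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions: List[Tuple[str, bool]]) -> float:
    """Return the gap between the highest and lowest group selection rates."""
    if not decisions:
        raise ValueError("at least one decision is required")
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions: (group label, was the applicant approved?)
sample_decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(sample_decisions)
gap = demographic_parity_difference(sample_decisions)
print(rates, gap)
```

A gap near zero means the groups are approved at similar rates. What size of gap counts as acceptable is a policy decision that the assessment itself has to justify.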

Investing in Explainable AI (XAI)

Explainability is a key requirement in many AI guidelines and regulations, especially for high-risk AI applications. Companies are investing in explainable AI techniques that make the decision-making processes of AI systems transparent and understandable to humans. This supports regulatory compliance and also builds trust with users and stakeholders.
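To make the idea concrete, here is a minimal sketch of one of the simplest forms of explanation: for a linear scoring model, each feature's contribution is its weight multiplied by its value, so a decision can be broken down term by term. The weights, feature names, and applicant values are invented for illustration and do not represent any particular company's system. More complex models generally need dedicated attribution methods.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS: Dict[str, float] = {
    "income": 0.5,
    "debt_ratio": -2.0,
    "years_employed": 0.25,
}
BIAS = 1.0


def score(features: Dict[str, float]) -> float:
    """Compute the model score as the bias plus a weighted sum of features."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def explain(features: Dict[str, float]) -> List[Tuple[str, float]]:
    """List each feature's contribution, largest absolute effect first."""
    contributions = [(name, WEIGHTS[name] * features[name]) for name in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical applicant, with values already scaled to comparable units.
applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}

print(f"score = {score(applicant)}")
for name, contribution in explain(applicant):
    print(f"{name}: {contribution:+.2f}")
```

Because the contributions and the bias add up exactly to the score, a reviewer can see which inputs pushed the decision up or down and by how much.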

Engaging in Ongoing Training and Education

The fast-evolving nature of AI technology and its regulatory environment requires continuous learning and adaptation. Companies are investing in ongoing training for their teams to stay updated on the latest AI advancements, ethical considerations, and regulatory requirements. This includes understanding the implications of AI in different sectors and how to address ethical dilemmas.

Participating in Multi-Stakeholder Initiatives

Many organizations are joining forces with other companies, governments, academic institutions, and civil society organizations to shape the future of AI regulation. Participating in initiatives like the Global Partnership on AI (GPAI), or aligning with the AI Principles of the Organisation for Economic Co-operation and Development (OECD), allows companies to contribute to and stay informed about best practices and emerging regulatory trends.

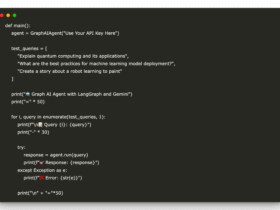

Developing and Sharing Best Practices

As companies navigate the complexities of AI regulation and ethical considerations, many are documenting and sharing their experiences and best practices. This includes publishing case studies, contributing to industry guidelines, and participating in forums and conferences dedicated to responsible AI.

Together, these steps form a comprehensive approach to responsible AI development and deployment, in line with global efforts to ensure that AI technologies benefit society while minimizing risks and ethical concerns. As AI continues to advance, approaches to compliance will likely evolve, requiring ongoing vigilance and adaptation by companies.