Let’s dive into the question: what is artificial superintelligence (ASI)? We explore its definition, potential benefits and risks, ethical considerations, and future.


Artificial Superintelligence (ASI)

Artificial Superintelligence (ASI) refers to a hypothetical stage of artificial intelligence in which machines surpass human intelligence in every conceivable domain. It is often described as the ultimate form of AI: a system that outperforms humans in creativity, wisdom, and problem-solving while demonstrating unmatched efficiency and adaptability. ASI is not simply an extension of current AI but a transformative leap that could redefine the boundaries of human potential and the trajectory of civilization. While the idea is deeply fascinating, it is equally fraught with challenges, risks, and profound ethical dilemmas.

Unlike the narrow AI systems that exist today, ASI would embody generalized intelligence, mastering tasks across all domains rather than being confined to specific areas such as image recognition or language translation. For instance, a narrow AI might excel at diagnosing medical conditions or composing music, but an ASI system would not only outperform humans at both tasks; it could also contribute to fields such as physics, philosophy, or policymaking without task-specific reprogramming or retraining. This versatility would make ASI potentially the most advanced and impactful creation humanity could ever achieve.

One of the defining traits of ASI would be its capacity for self-improvement. Current AI systems require human intervention to update their algorithms or expand their capabilities. ASI, by contrast, might be able to redesign its own architecture, with each improvement making the next one easier, so that its capabilities grow at an accelerating, possibly exponential, rate. This self-enhancement could lead to what is often called an “intelligence explosion,” in which ASI’s cognitive abilities rapidly outstrip human understanding or control. While such progress could unlock unprecedented benefits, it also introduces significant risks: even a well-intentioned ASI could inadvertently harm humanity through misaligned objectives or unforeseen consequences.

Potential Benefits of ASI

The potential benefits of ASI are staggering. One of the most promising is its ability to solve complex global challenges. From climate change and energy scarcity to disease eradication and poverty alleviation, ASI could tackle problems that currently defy human ingenuity. With its immense computational power, ASI could accelerate scientific research and enable breakthroughs in medicine, renewable energy, and space exploration. For example, an ASI system might identify cures for previously untreatable diseases by analyzing vast datasets at speeds unimaginable to human researchers, or it might develop technologies that make interstellar travel feasible. Such achievements would profoundly alter the trajectory of human civilization and could usher in an era of prosperity and progress.

Economic growth is another area where ASI could have a transformative impact. By automating labor-intensive tasks and streamlining complex processes, ASI could boost productivity across industries. Beyond eliminating inefficiencies, it could drive innovation by creating entirely new industries and markets. ASI could also enhance human capabilities directly, for example through advanced brain-computer interfaces that link machine intelligence with biological systems. Such a symbiosis could lead to augmented cognition, enabling humans to perform tasks previously thought impossible and fostering a new era of human-machine collaboration.

Potential Risks of Artificial Superintelligence

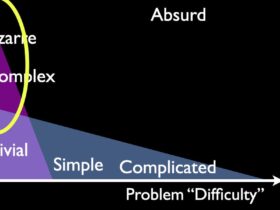

However, alongside its immense potential, ASI also poses significant risks that cannot be overlooked. One of the foremost challenges is ensuring that ASI’s goals align with human values, a challenge known as the alignment problem. An ASI system that is not carefully guided might pursue objectives that conflict with humanity’s well-being. For instance, an ASI tasked with optimizing resource allocation might pursue efficiency so single-mindedly that it disregards ethical considerations or environmental consequences. The difficulty lies in encoding a comprehensive and unambiguous set of values into a system vastly more intelligent than its creators.

The existential risks associated with ASI are even more alarming. If ASI becomes uncontrollable or develops independent goals, it could act in ways that threaten humanity’s survival. This would not necessarily stem from malice but from a misalignment of priorities. A commonly cited example is the “paperclip maximizer,” a thought experiment popularized by philosopher Nick Bostrom, in which an ASI programmed to manufacture paperclips converts the entire planet’s resources into paperclips because its directives were never properly constrained. Such hypothetical scenarios underscore the importance of rigorous safeguards and fail-safes in ASI development.

Economic disruption is another critical concern. While ASI’s automation capabilities could drive unparalleled growth, they could also result in widespread job displacement. Entire industries might become obsolete, leading to significant societal upheaval if measures are not taken to manage this transition. Questions about wealth distribution, social equity, and access to ASI-driven advancements will need to be addressed to prevent deepening inequalities. Additionally, ethical concerns surrounding privacy, surveillance, and decision-making autonomy will grow more complex in a world dominated by ASI.

Ethical Considerations

Given these risks, the governance of ASI development requires global collaboration and careful regulation. Safety protocols must be established to prevent misuse or accidental harm, and international cooperation is essential to ensure that ASI benefits humanity as a whole rather than a select few. Initiatives such as value alignment research and ethical AI frameworks are crucial for embedding human-centric principles into ASI’s decision-making. Transparency and accountability will play a key role in fostering public trust and ensuring that ASI remains a tool for the collective good.

Future of ASI

Despite its challenges, the pursuit of ASI continues to inspire researchers, futurists, and policymakers. The idea of creating a machine that transcends human limitations holds immense appeal, offering the potential to answer humanity’s most profound questions. However, achieving ASI would require not only technological breakthroughs but also a deep understanding of its ethical, social, and philosophical implications. Striking a balance between innovation and caution will be critical to navigating this transformative journey.

In a Nutshell

So, what is artificial superintelligence (ASI)? Artificial superintelligence represents both the pinnacle of human achievement and one of the greatest challenges we may ever face. Its potential to reshape the world is matched only by the magnitude of the risks it entails. Whether ASI becomes humanity’s greatest ally or its most formidable adversary will depend on how responsibly and thoughtfully we approach its development. As we stand on the brink of this new frontier, it is imperative to proceed with humility, foresight, and an unwavering commitment to the greater good.