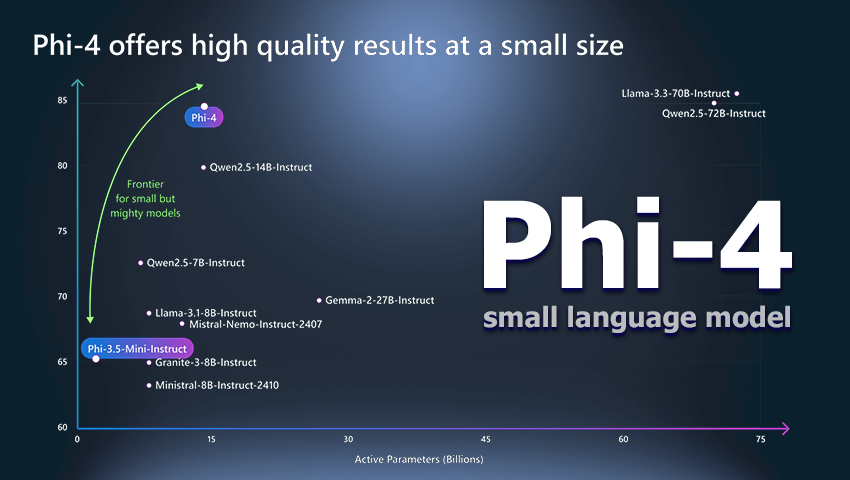

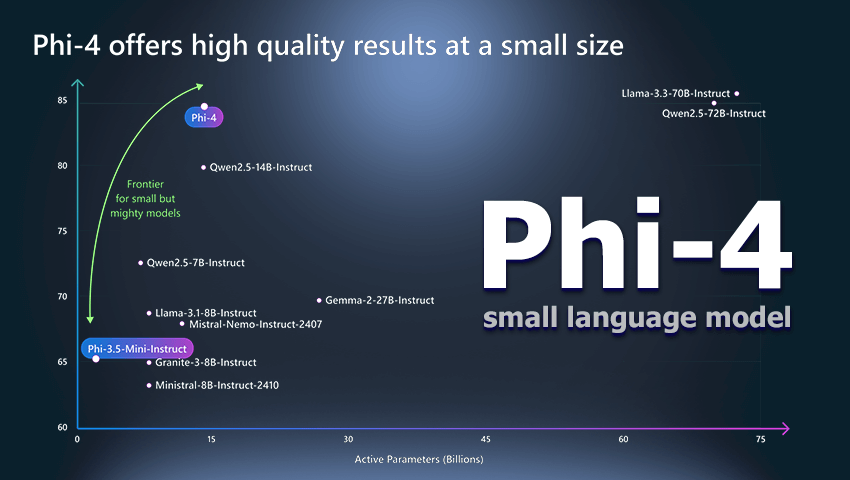

Microsoft has released its generative AI model Phi-4 with fully open weights on the Hugging Face platform. Since it was first presented in December 2024, Phi-4 has drawn attention for its strong performance in mathematical reasoning and multitask language understanding, while requiring fewer computational resources than larger models.

Phi-4, boasting 14 billion parameters, is designed to compete with models like GPT-4o mini, Gemini 2.0 Flash, and Claude 3.5 Haiku.

This Small Language Model (SLM) is optimized for complex mathematical problems, logical reasoning, and efficient multitasking. Despite its relatively small size, Phi-4 delivers high performance, handles a context window of 16K tokens, and suits applications that demand both precision and efficiency. Another standout feature is its MIT license, which allows free use, modification, and distribution, including for commercial purposes.
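A back-of-the-envelope calculation helps explain the modest hardware needs. The sketch below estimates weights-only memory for a 14-billion-parameter model at common numeric precisions. It assumes the rounded 14B figure and deliberately ignores activations, the KV cache, and quantization overhead, so real requirements are somewhat higher; the function name `weight_memory_gb` is purely illustrative.

```python
# Rough, weights-only memory estimate for a 14B-parameter model.
# Assumption: parameter count rounded to 14 billion; activations,
# KV cache, and quantization metadata are NOT included.
PARAMS = 14e9

# Storage cost of a single parameter at each precision, in bytes
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: float, precision: str) -> float:
    """Return weights-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(PARAMS, precision):.0f} GB")
```

At bf16 the weights alone come to about 28 GB, while 4-bit quantization brings that down to roughly 7 GB.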

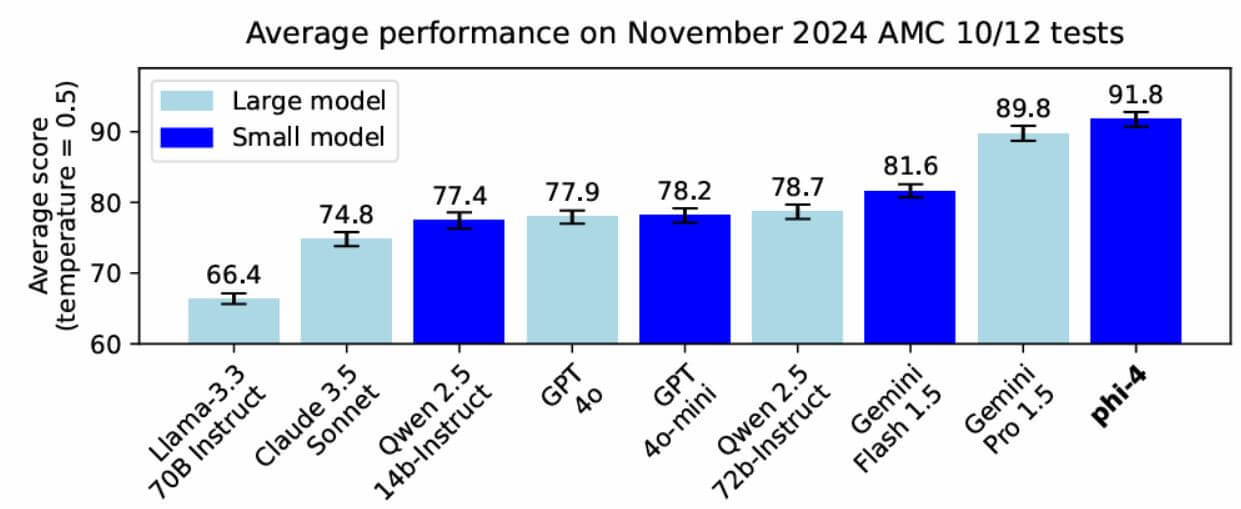

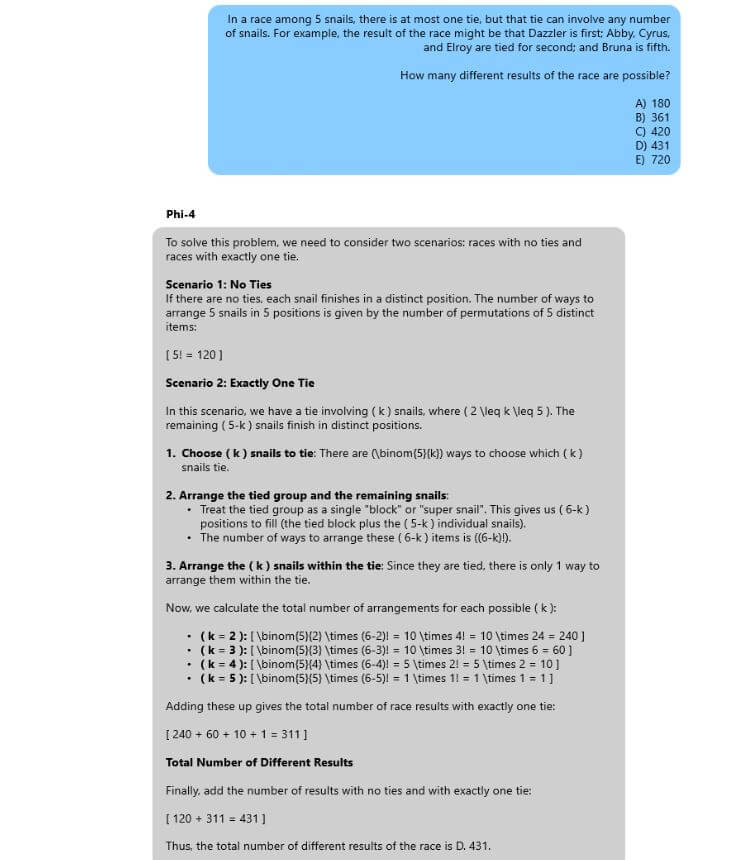

Microsoft trained the model largely on synthetic data and refined it with post-training techniques such as supervised fine-tuning and direct preference optimization, improving its accuracy on tasks that require reasoning. An example of Phi-4’s mathematical reasoning is shown in the accompanying figure.

In April 2024, Microsoft introduced Phi-3 Mini, the first model in the Phi-3 series of small language models. It had 3.8 billion parameters and was trained on far less data than larger models such as GPT-4. It was followed in August 2024 by the Phi-3.5 models, including Phi-3.5-vision and Phi-3.5-MoE, which were trained on synthetic data and filtered public datasets and support contexts of up to 128,000 tokens. Phi-4 is the latest step in this line of small models.

Initially, Phi-4 was available through the Azure AI Foundry platform. Now, Microsoft has released Phi-4 on the Hugging Face platform with open weights under an MIT license. Phi-4 is also available through Ollama.
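For local experiments, a minimal sketch of querying Phi-4 through Ollama's REST chat endpoint might look like the following. It assumes an Ollama server running on its default address (`http://localhost:11434`) with the model already pulled under the `phi4` tag (`ollama pull phi4`); the helper names `build_chat_request` and `ask_phi4` are hypothetical and not part of any library.

```python
import json
import urllib.request

# Assumed local setup: default Ollama address and the `phi4` model tag
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "phi4"


def build_chat_request(prompt: str, model: str = MODEL_TAG) -> bytes:
    """Encode a single-turn, non-streaming chat request as JSON bytes."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # request one complete JSON reply instead of chunks
    }
    return json.dumps(payload).encode("utf-8")


def ask_phi4(prompt: str, url: str = OLLAMA_CHAT_URL, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=build_chat_request(prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Non-streaming /api/chat responses carry the answer under message.content
    return body["message"]["content"]
```

With the server running, `print(ask_phi4("Solve 3x + 7 = 22 for x."))` prints the model's answer. Disabling streaming trades incremental output for simpler parsing; only the standard library is used, so the sketch runs without extra dependencies.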

Phi-4 excels in several key areas. On mathematical benchmarks, including complex competition-style problems, it outperforms some considerably larger models, which makes it well suited to applications that demand high-precision calculations. It also handles multitasking and logical reasoning efficiently and achieves high performance with limited computational resources. These features make Phi-4 a strong choice for scientific and commercial projects that require both precision and resource efficiency.

Because Phi-4’s weights are publicly available, developers can freely integrate the model into their projects and customize it for specific tasks, significantly expanding its potential applications across various fields.

For more details on the technical specifications, we recommend reviewing the full technical report on arXiv.