Alibaba has released more than 100 open-source AI models, including Qwen 2.5 72B, which beats other open-source models on math and coding benchmarks.

Much of the AI industry’s attention on open-source models has focused on Meta’s Llama 3, but Alibaba’s Qwen 2.5 has significantly closed the gap. The newly released Qwen 2.5 family ranges in size from 0.5 to 72 billion parameters and includes general-purpose base models as well as models focused on very specific tasks.

Alibaba says these models offer “enhanced knowledge and stronger capabilities in math and coding.” The lineup also includes specialized models for coding, math, and multiple modalities, including language, audio, and vision.

Alibaba Cloud also announced an upgrade to its proprietary flagship model, Qwen-Max, which it has not released as open source. The Qwen 2.5 Max benchmarks look good, but it’s the Qwen 2.5 72B model that has generated most of the excitement among open-source fans.

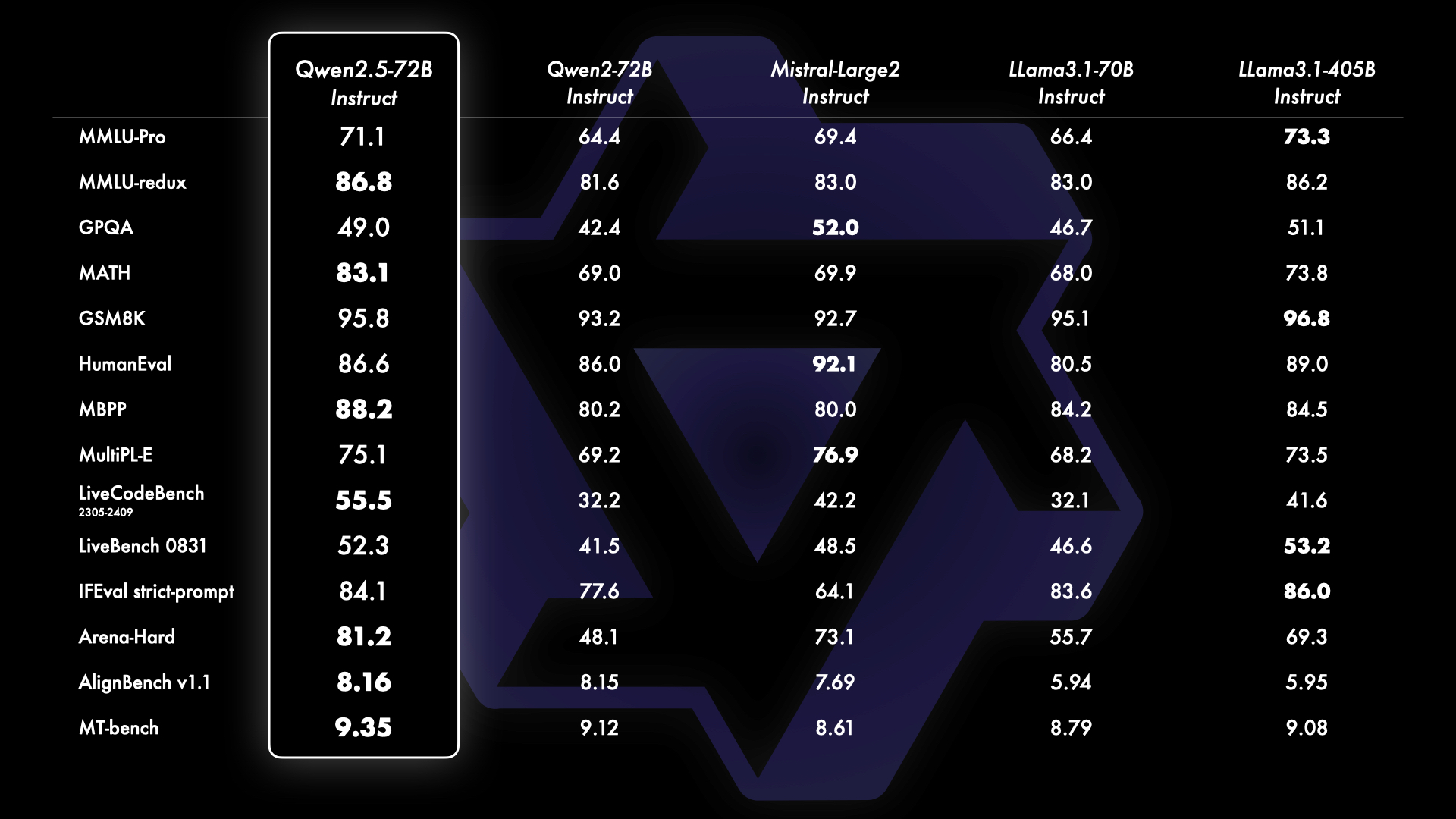

The benchmarks show Qwen 2.5 72B beating Meta’s much larger flagship Llama 3.1 405B model on several fronts, especially in math and coding. The gap between open-source models and proprietary ones like those from OpenAI and Google is also closing fast.

Early testing of Qwen 2.5 72B shows the model falling just short of Claude 3.5 Sonnet and even beating OpenAI’s o1 models at coding.

Open source Qwen 2.5 beats o1 models on coding 🤯🤯

Qwen 2.5 scores higher than the o1 models on coding on Livebench AI

Qwen is just below Sonnet 3.5, and for an open-source mode, that is awesome!!

o1 is good at some hard coding but terrible at code completion problems and… pic.twitter.com/iazam61eP9

— Bindu Reddy (@bindureddy) September 20, 2024

Alibaba says all of these new models were trained on its large-scale dataset of up to 18 trillion tokens. The Qwen 2.5 models support a context window of up to 128K tokens and can generate outputs of up to 8K tokens.

The shift toward smaller, more capable, and free open-source models will likely have a broader impact on everyday users than more advanced models like o1. Because these models can run at the edge and on-device, you can get a lot of mileage from a free model running on your laptop.

Smaller Qwen 2.5 models, such as the 7B coder, deliver GPT-4-level coding performance for a fraction of the cost, or even for free if you have a decent laptop to run them locally.

We have GPT-4 for coding at home! I looked up @OpenAI GPT-4 0613 results for various benchmarks and compared them with @Alibaba_Qwen 2.5 7B coder. 👀

> 15 months after the release of GPT-0613, we have an open LLM under Apache 2.0, which performs just as well. 🤯

> GPT-4 pricing… pic.twitter.com/2szw5kwTe5

— Philipp Schmid (@_philschmid) September 22, 2024

In addition to the LLMs, Alibaba released a significant update to its vision-language model, Qwen2-VL. Qwen2-VL can understand videos longer than 20 minutes and supports video-based question answering.

It’s designed to be integrated into mobile phones, cars, and robots to automate tasks that require visual understanding.

Alibaba also unveiled a new text-to-video model as part of Tongyi Wanxiang, its family of large image-generation models. Tongyi Wanxiang AI Video can produce cinematic-quality video and 3D animation in a variety of artistic styles from text prompts.

The demos look impressive, and the tool is free to use, although you’ll need a Chinese mobile number to sign up. Sora is going to face serious competition if and when OpenAI eventually releases it.