As AI language models become increasingly sophisticated, they play a crucial role in generating text across various domains. However, ensuring the accuracy of the information they produce remains a challenge. Misinformation, unintentional errors, and biased content can propagate rapidly, impacting decision-making, public discourse, and user trust.

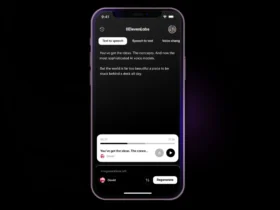

Google DeepMind has unveiled an AI fact-checking tool for large language models (LLMs). The tool, named SAFE (Search-Augmented Factuality Evaluator), automatically checks the factual accuracy of long-form text generated by LLMs, with the aim of making AI-generated content more reliable and trustworthy.

SAFE uses an LLM to split a long-form response into individual facts and to rewrite each one as a self-contained statement that can be checked on its own. It then verifies each fact through multi-step reasoning: it writes Google Search queries, reads the returned results, issues further queries if needed, and finally decides whether the search results support the fact. What sets SAFE apart is this search-and-reason loop, rather than a single lookup.
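The pipeline described above can be sketched in Python. This is a toy illustration under loud assumptions, not DeepMind's implementation: every step that SAFE delegates to an LLM is replaced here by a simple rule, and a small in-memory list of snippets (`corpus`) stands in for Google Search. All function names (`split_into_facts`, `make_standalone`, `next_query`, `search`, `rate_fact`, `check_response`) are hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class FactRating:
    fact: str
    supported: bool
    evidence: list[str] = field(default_factory=list)


def tokens(text: str) -> set[str]:
    """Lowercased word tokens, used by the toy search and rating steps."""
    return set(re.findall(r"\w+", text.lower()))


def split_into_facts(response: str) -> list[str]:
    # SAFE prompts an LLM for this step; this sketch just splits on sentence ends.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", response) if s.strip()]


def make_standalone(fact: str, subject: str) -> str:
    # SAFE prompts an LLM to resolve references; this sketch only replaces a
    # leading "It" with a subject supplied by the caller (hypothetical shortcut).
    return re.sub(r"^It\b", lambda _m: subject, fact)


def search(query: str, corpus: list[str], min_overlap: int = 3) -> list[str]:
    # Stand-in for Google Search: return snippets sharing enough words with the query.
    q = tokens(query)
    need = min(min_overlap, len(q))
    return [doc for doc in corpus if len(q & tokens(doc)) >= need]


def next_query(fact: str, evidence: list[str]) -> str:
    # Hypothetical query strategy: search for the whole fact first, then for
    # the words of the fact that no snippet collected so far contains.
    if not evidence:
        return fact
    covered = set().union(*(tokens(s) for s in evidence))
    return " ".join(sorted(tokens(fact) - covered))


def rate_fact(fact: str, corpus: list[str], max_steps: int = 3) -> FactRating:
    evidence: list[str] = []
    for _ in range(max_steps):
        query = next_query(fact, evidence)
        if not query:  # every word of the fact already appears in the evidence
            break
        new = [s for s in search(query, corpus) if s not in evidence]
        if not new:  # the search turned up nothing new, so stop early
            break
        evidence.extend(new)
    # Toy verdict: supported only if one snippet contains every word of the fact.
    supported = any(tokens(fact) <= tokens(s) for s in evidence)
    return FactRating(fact, supported, evidence)


def check_response(response: str, subject: str, corpus: list[str]) -> list[FactRating]:
    facts = [make_standalone(f, subject) for f in split_into_facts(response)]
    return [rate_fact(f, corpus) for f in facts]


if __name__ == "__main__":
    corpus = [
        "The Eiffel Tower is located in Paris.",
        "The Eiffel Tower was completed in 1889.",
    ]
    response = "The Eiffel Tower is located in Paris. It was completed in 1899."
    for rating in check_response(response, "The Eiffel Tower", corpus):
        print(rating.supported, rating.fact)
```

Run on the sample response, the first fact is marked supported and the second ("completed in 1899") is not, because the follow-up query for the uncovered word "1899" finds no matching snippet. The loop mirrors the multi-step idea: each new query targets what earlier results left unexplained. A real system would prompt an LLM at each of these steps instead of using word overlap.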

In testing, the research team ran SAFE on approximately 16,000 individual facts drawn from responses generated by several LLMs. SAFE's ratings agreed with those of crowdsourced human annotators 72% of the time. On a random sample of 100 cases where SAFE and the annotators disagreed, SAFE's rating turned out to be the correct one 76% of the time.
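The 72% and 76% figures measure different things, which a small calculation makes clear. The first is agreement over all rated facts; the second is how often SAFE was right among only the disputed cases where SAFE and the humans gave different labels. The sketch below uses made-up labels (`S` for supported, `N` for not supported) rather than the study's data, and the function names are hypothetical.

```python
def agreement_rate(safe: list[str], human: list[str]) -> float:
    """Fraction of facts on which SAFE and the human rater gave the same label."""
    if len(safe) != len(human) or not safe:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(a == b for a, b in zip(safe, human)) / len(safe)


def win_rate_on_disagreements(safe: list[str], human: list[str], truth: list[str]) -> float:
    """Among facts where SAFE and the human disagree, the fraction where SAFE matches the adjudicated label."""
    disputed = [i for i, (a, b) in enumerate(zip(safe, human)) if a != b]
    if not disputed:
        raise ValueError("no disagreements to score")
    return sum(safe[i] == truth[i] for i in disputed) / len(disputed)


# Made-up labels for five facts: S = supported, N = not supported.
safe = ["S", "S", "N", "S", "N"]
human = ["S", "N", "N", "N", "S"]
truth = ["S", "S", "N", "N", "N"]  # hypothetical adjudicated labels

print(agreement_rate(safe, human))                    # 0.4
print(win_rate_on_disagreements(safe, human, truth))  # 0.6666666666666666
```

With these toy labels SAFE agrees with the humans on only 2 of 5 facts (40%), yet it is right on 2 of the 3 disputed facts (about 67%), so a modest agreement rate and a high win rate on disagreements can coexist.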

SAFE's benefits extend beyond accuracy. According to the researchers, it is more than 20 times cheaper than human annotators, which makes it a practical way to check the large volumes of text that LLMs produce. Because the process is fully automated, it also scales far more easily than human fact-checking as the amount of generated content grows.

While SAFE represents a significant step toward more reliable LLMs, challenges remain. Ensuring that the tool remains up-to-date with evolving information and maintaining a balance between accuracy and efficiency are ongoing tasks.

DeepMind has made the SAFE code and its benchmark dataset, LongFact, publicly available on GitHub. Researchers, developers, and organizations can use them to evaluate and improve the factual reliability of AI-generated content.

Delve deeper into the world of LLMs and explore efficient solutions for text processing issues using large language models, llama.cpp, and the guidance library in our recent article “Optimizing text processing with LLM. Insights into llama.cpp and guidance.”