As we head into 2025, artificial intelligence is changing fast. New regulations and guidelines are emerging to tackle major issues such as data security, privacy, fairness, and transparency. These changes are central to making AI more responsible.

New standards and rules are reshaping AI ethics, with a particular focus on data privacy and fairness. For more on AI ethics, check out our in-depth article on the ethics of artificial intelligence.

Key Takeaways

- New guidelines and regulations are transforming the AI landscape in 2025.

- The focus is on addressing concerns around data responsibility, privacy, fairness, and transparency.

- Emerging standards aim to create a more ethical AI ecosystem.

- Regulatory bodies and industry leaders are releasing key frameworks.

- Responsible AI development and deployment are becoming increasingly important.

The Transformation of AI Ethics in 2025

2025 is a pivotal year for AI ethics, shaped by both new technology and new laws. To navigate it, organizations need to understand the recent regulations and the stakeholders driving them.

Recent Regulatory Breakthroughs

The International AI Ethics Accord is a major step toward international cooperation on AI ethics. Its goal is to ensure that AI is developed and used responsibly worldwide.

The International AI Ethics Accord

The accord sets out core ethical principles for AI, including transparency, accountability, and a human-centered approach. This gives companies a reference point for checking that their AI systems meet emerging global standards.

Regional Implementation Variations

Although the accord is a global framework, its implementation will vary by region because of differences in legal systems and cultures. Companies need to adapt their AI strategies to these differences.

Key Stakeholders Driving Change

Governments and industry groups are the main forces shaping AI ethics in 2025, developing the rules and standards for responsible AI.

Government Initiatives

Governments are establishing clear rules for AI, which helps ensure that AI is used appropriately across industries.

Industry Consortium Contributions

Industry groups are also contributing significantly by developing best practices and technical standards for AI. Their work will be vital to the future of AI ethics.

Core Principles Defining Modern AI Ethics

Modern AI ethics is built on three key principles: transparency, accountability, and a human-centered approach. These principles are vital for making AI systems trustworthy, fair, and beneficial to society.

Transparency Requirements for AI Systems

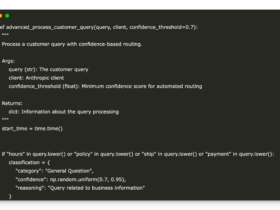

Transparency in AI means being able to see how these systems reach their decisions. It rests on two pillars: explainability standards that help people understand the decisions of complex models, and clear documentation and disclosure.

Explainability Standards for Complex Models

Explainability standards are especially important for complex AI models. They help developers understand, and communicate to others, how these models make decisions. This matters most where AI decisions can significantly affect people.
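To make the idea concrete, here is a minimal sketch of one widely used, model-agnostic explainability technique, permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The article does not name a specific method, so the choice of technique, the function names, and the toy model below are illustrative assumptions.

```python
import random
from typing import Callable, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    predict: Callable[[List[float]], int],
    X: List[List[float]],
    y: List[int],
    n_repeats: int = 5,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled.

    A larger drop means the model relies more heavily on that feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            permuted = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(y, [predict(row) for row in permuted]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy model that bases its decision on feature 0 only.
def toy_model(row: List[float]) -> int:
    return int(row[0])


X = [[i % 2, 7] for i in range(20)]
y = [row[0] for row in X]
importances = permutation_importance(toy_model, X, y)
print(importances)  # feature 1 scores 0.0 because the model ignores it
```

Because it only calls the model's prediction function, this approach works even when a model's internals are opaque; the trade-off is that correlated features can share or mask each other's importance.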

Documentation and Disclosure Protocols

Thorough documentation and disclosure are essential for transparency. They ensure that users and other stakeholders know what an AI system can do, what it cannot do, and what biases it may have.
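One way to structure such a disclosure is as a small record with explicit sections for capabilities, limitations, and known biases, loosely in the spirit of a "model card". The class and field names below are hypothetical and not taken from any particular standard; treat this as a sketch of the idea rather than a prescribed format.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ModelDisclosure:
    """Hypothetical disclosure record for an AI system."""
    name: str
    intended_use: str
    capabilities: List[str] = field(default_factory=list)
    limitations: List[str] = field(default_factory=list)
    known_biases: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of sections left empty, so reviewers can flag incomplete disclosures."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def render(self) -> str:
        """Plain-text summary suitable for publishing alongside the model."""
        lines = [f"Model: {self.name}", f"Intended use: {self.intended_use}"]
        sections = (
            ("Capabilities", self.capabilities),
            ("Limitations", self.limitations),
            ("Known biases", self.known_biases),
        )
        for title, items in sections:
            lines.append(f"{title}:")
            # Make omissions visible instead of silently dropping the section.
            lines.extend(f"  - {item}" for item in (items or ["(not disclosed)"]))
        return "\n".join(lines)


card = ModelDisclosure(
    name="loan-screener-v1",  # hypothetical model
    intended_use="Pre-screening consumer loan applications for human review",
    capabilities=["Ranks applications by estimated default risk"],
    limitations=["Not validated for small-business loans"],
)
print(card.missing_sections())  # ['known_biases']
print(card.render())
```

Rendering an empty section as "(not disclosed)" rather than omitting it keeps gaps in the documentation visible to readers.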

Accountability Frameworks

Accountability frameworks are being developed to ensure that individuals and organizations are held responsible for the effects of their AI systems.

Liability Distribution Models

Liability distribution models are part of these accountability frameworks. They help determine who is responsible when an AI system causes harm or fails to work as intended.

Certification and Compliance Mechanisms

Certification and compliance mechanisms are also important. They verify that AI systems meet established standards and that developers follow best practices.

Human-Centered Design Mandates

Human-centered design puts people first in AI development, ensuring that AI systems are built to serve individuals and society.

By focusing on these core principles, we can ensure that AI is developed responsibly, with its effects on individuals and society considered from the start.

Addressing AI Bias and Fairness: New Standards and Solutions

AI systems are now a major part of everyday life, so it is essential that they are fair and free of bias. Tackling bias and fairness as AI development continues is how we build AI that people can trust.

Algorithmic Fairness Requirements

New rules are being written to make AI systems fair. They aim to prevent AI from treating particular groups unfairly and to ensure that AI decisions are equitable for everyone.

Protected Attribute Considerations

Reducing bias starts with protected attributes such as race, gender, age, and disability. Examining how an AI system's behavior varies across these attributes helps ensure that it treats everyone fairly.

Outcome Equality Measurements

Fairness has to be measured, not assumed. Outcome equality metrics compare the decisions an AI system makes across groups to check whether outcomes are distributed fairly.
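As an illustration, one common outcome-equality metric is demographic parity: compare the rate of positive decisions (for example, loan approvals) across groups. The sketch below assumes binary decisions and a single group label per record; the data is made up, and the new standards may call for different or additional metrics.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(decisions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive decisions (1 = approved) within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means equal rates)."""
    rates = selection_rates(decisions, groups).values()
    return max(rates) - min(rates)


# Hypothetical decisions for two groups of four applicants each.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(decisions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions, groups))  # 0.5
```

Demographic parity ignores whether individual decisions were correct, so it is usually read alongside metrics based on error rates.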

Testing and Validation Protocols

Rigorous testing is central to fairness. Strict validation protocols help find and fix bias in AI systems so that their decisions are fair.
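A validation protocol can turn a fairness metric into a pass/fail check that runs before a model is released. The sketch below uses one commonly cited criterion, equal opportunity, which compares true positive rates across groups. The function names and the 0.1 tolerance are illustrative assumptions, not values drawn from any regulation.

```python
from typing import Dict, Hashable, Sequence


def tpr_by_group(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """True positive rate per group: share of actual positives predicted positive.

    Groups with no actual positives are omitted, since their rate is undefined.
    """
    positives: Dict[Hashable, int] = {}
    hits: Dict[Hashable, int] = {}
    for actual, predicted, group in zip(y_true, y_pred, groups):
        if actual == 1:
            positives[group] = positives.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + predicted
    return {g: hits[g] / positives[g] for g in positives}


def passes_equal_opportunity_check(y_true, y_pred, groups, max_gap: float = 0.1) -> bool:
    """Return False if true positive rates differ across groups by more than max_gap."""
    rates = tpr_by_group(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values()) <= max_gap


# Hypothetical validation data: true labels, model predictions, group membership.
y_true = [1, 1, 1, 0, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(tpr_by_group(y_true, y_pred, groups))
print(passes_equal_opportunity_check(y_true, y_pred, groups))  # False: the gap is about 0.67
```

In a release pipeline, a failing check like this would block deployment until the gap is investigated.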

Bias Detection Tools and Methodologies

Bias detection tools are essential. They help developers identify and correct unfairness in AI systems, making those systems fairer for everyone.
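Many bias detection tools report a disparate impact ratio: each group's selection rate divided by that of a reference group. The 0.8 threshold below echoes the "four-fifths rule" from US employment-selection guidance; the article does not say which thresholds the new AI standards use, so treat that value, the reference group, and the function names as placeholders.

```python
from typing import Dict, List, Sequence


def disparate_impact(
    decisions: Sequence[int], groups: Sequence[str], reference: str
) -> Dict[str, float]:
    """Selection rate of each non-reference group divided by the reference group's rate.

    Raises ZeroDivisionError if the reference group has no positive decisions.
    """
    counts: Dict[str, List[int]] = {}  # group -> [positive decisions, total decisions]
    for decision, group in zip(decisions, groups):
        tally = counts.setdefault(group, [0, 0])
        tally[0] += decision
        tally[1] += 1
    rates = {g: pos / total for g, (pos, total) in counts.items()}
    return {g: rate / rates[reference] for g, rate in rates.items() if g != reference}


def flag_groups(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Groups whose ratio falls below the (placeholder) threshold."""
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical decisions for three groups of four applicants each.
decisions = [1, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 0]
groups = ["A"] * 4 + ["B"] * 4 + ["C"] * 4
ratios = disparate_impact(decisions, groups, reference="A")
print(ratios)
print(flag_groups(ratios))  # ['B']
```

A flagged group is a signal for closer review, not proof of unlawful bias on its own.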

Third-Party Auditing Requirements

Independent third-party audits provide an external check that an AI system is fair, adding an extra layer of trust.

Diverse Data Collection Standards

Diverse training data helps prevent bias. When data is drawn from a broad range of people, AI systems are more likely to be both fair and accurate.

Representation Requirements

Datasets should represent the full range of people an AI system will serve. Adequate representation helps avoid bias and makes it more likely that the system works well for everyone.
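A representation requirement can be checked by comparing each group's share of the dataset with a reference share, such as its share of the population the system will serve. In the sketch below, the age bands, reference shares, and 5-percentage-point tolerance are hypothetical choices for illustration.

```python
from collections import Counter
from typing import Dict, Sequence


def representation_gaps(
    samples: Sequence[str], reference_shares: Dict[str, float], tolerance: float = 0.05
) -> Dict[str, float]:
    """Groups whose dataset share is more than `tolerance` below their reference share.

    Each value is (dataset share - reference share), so it is negative for
    under-represented groups. Groups absent from the data count as a 0% share.
    """
    counts = Counter(samples)
    total = len(samples)
    gaps: Dict[str, float] = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total
        if observed < expected - tolerance:
            gaps[group] = observed - expected
    return gaps


# Hypothetical dataset of 100 records labelled by age band.
samples = ["18-39"] * 55 + ["40-64"] * 38 + ["65+"] * 7
reference = {"18-39": 0.40, "40-64": 0.40, "65+": 0.20}
gaps = representation_gaps(samples, reference)
print(gaps)  # only "65+" is flagged
```

This check only catches under-representation; over-represented groups (here "18-39") pass, which may or may not match a given standard's intent.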

Data Quality Assurance Processes

Keeping data accurate is vital: high-quality data makes AI systems more reliable and fairer, and therefore more trustworthy.
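Data quality assurance usually starts with simple automated checks. This sketch counts missing required fields and exact duplicate records; the field names and the definition of "missing" (None or an empty string) are assumptions, and a real pipeline would add range, type, and consistency checks.

```python
from typing import Any, Dict, List


def quality_report(records: List[Dict[str, Any]], required: List[str]) -> Dict[str, Any]:
    """Count missing required fields and exact duplicate records.

    Assumes record values are hashable so whole records can be compared.
    """
    missing = {name: 0 for name in required}
    seen = set()
    duplicates = 0
    for record in records:
        for name in required:
            if record.get(name) in (None, ""):
                missing[name] += 1
        # Sort items so that key order does not affect duplicate detection.
        key = tuple(sorted(record.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


# Hypothetical applicant records.
records = [
    {"id": 1, "age": 34, "income": 52000},
    {"id": 2, "age": None, "income": 61000},
    {"id": 3, "age": 29, "income": ""},
    {"id": 1, "age": 34, "income": 52000},
]
report = quality_report(records, required=["age", "income"])
print(report)  # {'rows': 4, 'missing': {'age': 1, 'income': 1}, 'duplicates': 1}
```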

Industry Implementation of Responsible AI

Industry is moving toward responsible AI, driven by regulation and customer expectations. Companies face real challenges but also see significant benefits.

Corporate Adoption Challenges and Solutions

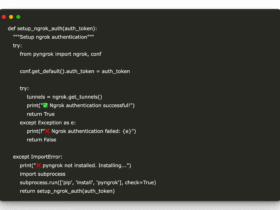

Adopting responsible AI is difficult because it requires substantial resources. Companies need to establish AI ethics teams and comply with regulations to keep their AI fair and transparent.

Resource Requirements for Compliance

Businesses must invest in AI auditing tools, ethical frameworks, and ongoing monitoring. These investments help detect bias and reduce AI-related risks.

Timeline Expectations for Implementation

The pace of adoption varies with a company's size and the complexity of its AI systems, and most companies will implement responsible AI practices over the next few years. Finance and healthcare are leading because their AI applications carry higher risks.

Case Studies: Ethical AI Deployment Success Stories

Many companies are already deploying ethical AI successfully. In healthcare, for example:

“AI in healthcare has cut down on mistakes and made medicine more personal.”

Healthcare Sector Innovations

In healthcare, AI supports medical image analysis, outcome prediction, and workflow optimization. These advances improve patient care and make healthcare delivery more efficient.

Financial Services Transformations

Financial services firms use AI for risk management, fraud detection, and customer service. By deploying AI ethically, banks can build customer trust and avoid regulatory penalties.

Economic and Competitive Implications

Responsible AI can benefit a company directly: it can provide a competitive edge, strengthen its reputation, and lower its risk exposure. Early adopters are likely to benefit most.

Conclusion: The Evolving Future of AI Ethics

AI ethics will only grow in importance as AI becomes more widespread. Addressing bias and fairness is essential to making AI systems fair and transparent.

AI ethics has taken major steps forward in 2025, with new regulations, better practices, and technical improvements. By focusing on AI ethics, we can create a future in which AI benefits everyone.

The field is changing fast, with new rules and solutions emerging to handle difficult issues. As it matures, expect a stronger focus on using AI responsibly, enabling innovation and growth while keeping risks low.