The AICorr team dives into the concept of retrieval-augmented generation (RAG) in artificial intelligence by answering the question “What is RAG in AI?”

RAG

As we know by now, artificial intelligence has advanced significantly in the last several years. The ability to produce relevant, accurate, and contextually enriched responses has become essential for improving user experiences. Retrieval-Augmented Generation (RAG) is a breakthrough in this domain, combining the strengths of information retrieval systems with the power of language generation models. The RAG approach is especially effective for applications that require substantial knowledge, accuracy, or real-time data integration, such as customer service, search engines, and domain-specific question-answering systems. In this article, we’ll explore what RAG is, how it works, and why it holds transformative potential for AI applications across industries.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation, or RAG, is a hybrid approach in natural language processing (NLP) that unites two core components: retrieval of relevant information from external sources and generation of a coherent, natural language response. Traditional language models, like GPT or BERT-based systems, rely on knowledge embedded during their training phases. While these models perform well on general-purpose queries, they may fall short on questions that require specific or recent information outside their training data. RAG addresses this limitation by adding a retrieval step that pulls in up-to-date or domain-specific knowledge, which the model then uses to generate a more informed response.

We can get a better understanding through two simple analogies.

- First, think of it like a student answering questions in an open-book exam. Instead of relying only on what the student (the AI model) remembers, they can quickly look up additional information from textbooks (the retrieval system) to give more accurate, detailed answers. This approach is useful because it lets the AI pull relevant data from a large knowledge base and then use that information to generate responses that are more informative and relevant than if it relied on pre-trained knowledge alone.

- Second, imagine you have a friend who answers questions by first checking a library of books and then using that information to give you a thoughtful answer. In RAG, the “library” is a database of knowledge, and the AI retrieves relevant information from it before generating a response. This way, instead of relying only on what it knows (pre-trained data), it can pull in current or more specific information, making responses more accurate and relevant to complex or niche questions.

The Mechanics of RAG: How It Works

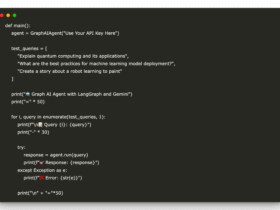

The RAG approach comprises two stages: retrieval and generation.

- The Retrieval Component

- The retrieval component is responsible for fetching relevant information from an external knowledge base, often referred to as a retrieval corpus. This corpus can be a curated collection of documents, such as news articles, scholarly papers, or product manuals, or any other structured or unstructured data relevant to the task at hand.

- When a query is submitted, a retrieval model identifies documents or passages that may contain pertinent information. Common choices include keyword-based ranking functions such as BM25 and dense, embedding-based retrievers such as Dense Passage Retrieval (DPR), which score documents by the vector similarity between their embeddings and the query’s. Either way, the documents are ranked by their relevance to the input query.

- This process allows the RAG system to search massive datasets efficiently, typically through a precomputed index, and pull in relevant chunks of information, even when the data is frequently updated or highly domain-specific.

- The Generation Component

- Once relevant information is retrieved, it is passed to a generation model, which synthesises the information into a coherent response. This generation model is typically a large language model, such as GPT-based models, which can interpret and merge retrieved data with the original query context.

- By grounding its output in the retrieved passages, the generation model can produce answers that are more contextually accurate and information-rich than it could by relying solely on the static knowledge stored within the model itself.
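
To make the retrieval component more concrete, here is a minimal sketch of BM25, one of the ranking methods mentioned above. It assumes lowercase whitespace tokenisation, parameter values k1 = 1.5 and b = 0.75 (within commonly used ranges), and a smoothed IDF variant, as used by Lucene, that never goes negative. The function names and the tiny corpus are illustrative only; real systems usually rely on a search library rather than hand-rolled scoring.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Return one BM25 score per document for the given query."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for t in tokenized for term in set(t))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in set(tokenize(query)):
            if term not in tf:
                continue
            # Smoothed IDF: rarer terms count for more; the "+ 1" keeps it positive.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # k1 saturates repeated terms; b normalises for document length.
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def top_k(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k highest-scoring documents, best first."""
    scores = bm25_scores(query, docs)
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [docs[i] for i in ranked[:k]]


corpus = [
    "the refund policy allows returns within 30 days",
    "our office opens at 9 am on weekdays",
    "shipping is free for orders over 50 euros",
]
print(top_k("what is the refund policy", corpus, k=1))
# → ['the refund policy allows returns within 30 days']
```

A dense retriever such as DPR would replace the term-matching score with a similarity between learned embeddings, but the ranking step (score every candidate, keep the top k) stays the same.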

The entire RAG process operates as a pipeline: the query first triggers the retrieval stage, which pulls in the top documents or passages related to the input; these are then fed into the language generation stage. This combination keeps RAG systems adaptable, letting them draw on up-to-date, domain-specific data without needing frequent retraining.
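
The retrieve-then-generate pipeline can be sketched end to end as follows. This is a toy under stated assumptions: `embed` is a hypothetical stand-in for a real embedding model (here just a bag-of-words count vector), retrieval ranks passages by cosine similarity, and `echo_generator` is a placeholder for a language model call, since the pipeline itself does not depend on any particular model or API.

```python
import math
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Stage 1: rank passages by similarity to the query and keep the top k."""
    q = embed(query)
    return sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the user's question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


def rag_answer(query: str, corpus: list[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Stage 2: hand the augmented prompt to the generator."""
    passages = retrieve(query, corpus, k)
    return generate(build_prompt(query, passages))


def echo_generator(prompt: str) -> str:
    """Placeholder generator: a real system would call a language model here."""
    return prompt


corpus = [
    "the warranty covers manufacturing defects for two years",
    "the cafeteria serves lunch from noon",
    "warranty claims require the original receipt",
]
print(rag_answer("how long is the warranty", corpus, echo_generator))
```

Passing the generator in as a function keeps the two stages decoupled: the retriever or the language model can be swapped out without changing the rest of the pipeline.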

Applications of RAG: Where It Shines

The Retrieval-Augmented Generation model shines in various applications, especially where accuracy, relevance, and real-time adaptability are paramount.

- Question Answering Systems

- In domains like medicine, law, or finance, questions often require specific and up-to-date information. RAG models can retrieve information from specialised sources, such as medical journals or legal databases, allowing them to provide accurate, nuanced answers that traditional language models may lack the internal knowledge to address.

- Customer Support and Chatbots

- Customer support systems require accurate, real-time responses to user queries. RAG can retrieve the latest product information, service updates, or policy details from company knowledge bases, improving the customer support experience with precise answers tailored to the user’s needs.

- Content Generation and Summarisation

- For businesses producing reports, summaries, or news updates, RAG models can retrieve relevant information from large data sources and then distil it into concise, human-readable content. This is particularly useful for summarising complex documents or articles, creating a bridge between vast data repositories and accessible content.

- Search and Recommendation Engines

- RAG also improves search engines and recommendation systems by adding a layer of intelligent summarisation. Instead of merely listing results, a RAG-powered search engine can produce informative summaries of the most relevant results, improving the user experience by providing answers rather than just links.

Benefits of RAG: Why Use This Hybrid Model?

The Retrieval-Augmented Generation approach offers distinct benefits over traditional language models and standalone retrieval systems.

- Enhanced Relevance and Accuracy

- RAG’s retrieval component enables it to pull in highly relevant, current information that may not be part of the language model’s training data. This feature allows RAG to generate responses that are not only more accurate but also contextually enriched and tailored to the user’s query.

- Scalability and Adaptability

- With RAG, companies and organisations can continuously update the external retrieval corpus without retraining the entire model, allowing it to adapt to new information quickly. This adaptability makes it ideal for environments where information changes frequently, such as news, scientific research, and product databases.

- Resource Efficiency

- RAG can extend the knowledge of large language models without the high cost of constant retraining. Because it retrieves relevant information only as needed, RAG maintains high performance while avoiding the computational demands of repeatedly retraining the model on new data.
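
The point about updating the retrieval corpus without retraining can be illustrated with a toy in-memory index. `SimpleIndex` is a hypothetical class, not a library API, and its word-overlap scoring is a stand-in for a real retriever; production systems typically use a search engine or vector database for this. The key observation is that adding knowledge means appending a document, and the very next query can find it, while no model weights are touched.

```python
class SimpleIndex:
    """Hypothetical in-memory retrieval corpus that can grow at any time."""

    def __init__(self) -> None:
        self.docs: list[str] = []

    def add(self, doc: str) -> None:
        # Updating the system's knowledge = appending to the corpus; no retraining.
        self.docs.append(doc)

    def search(self, query: str, k: int = 1) -> list[str]:
        # Score each document by how many distinct query words it contains.
        q = set(query.lower().split())
        scored = [(len(q & set(d.lower().split())), d) for d in self.docs]
        # Keep only documents sharing at least one word, best first.
        hits = sorted((pair for pair in scored if pair[0] > 0), key=lambda p: p[0], reverse=True)
        return [d for _, d in hits[:k]]


index = SimpleIndex()
index.add("version 1.0 supports csv export")
print(index.search("json support"))  # → []
index.add("version 2.0 adds json export")
print(index.search("json support"))  # → ['version 2.0 adds json export']
```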

Challenges of RAG: Balancing Complexity and Quality

Despite its many advantages, the RAG model also presents certain challenges. Let’s explore some of them below.

- Quality of Retrieved Information

- The generation stage’s accuracy and relevance depend heavily on the quality of the retrieved data. If irrelevant or incorrect information is retrieved, it can negatively impact the response. Ensuring the retrieval model’s performance is crucial for maintaining the quality of the generated text.

- Computational Complexity

- RAG models require more computation per query than standalone language models, as they involve both a retrieval and a generation process, and the retrieved passages lengthen the model’s input. This dual-component setup can be resource-intensive, especially for large datasets or applications needing real-time responsiveness.

- Risk of Misinformation

- If the retrieval corpus contains outdated or erroneous information, RAG models may unintentionally propagate misinformation. Ensuring the reliability of the external data source is critical to prevent inaccurate outputs.
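
Because answer quality hinges on what gets retrieved, the retriever is often evaluated on its own. One common metric is recall@k: for each query, the fraction of the relevant documents that appear in the top k results, averaged over queries. The sketch below assumes you have human relevance labels; the query strings, document IDs, and labels are made up for illustration and are not real evaluation data.

```python
def recall_at_k(retrieved: dict[str, list[str]], relevant: dict[str, set[str]], k: int) -> float:
    """Mean recall@k over all labelled queries.

    retrieved: query -> ranked list of document IDs returned by the retriever
    relevant:  query -> set of document IDs judged relevant by a human
    """
    per_query = []
    for query, gold in relevant.items():
        if not gold:
            continue  # recall is undefined when nothing is relevant
        top = set(retrieved.get(query, [])[:k])
        per_query.append(len(top & gold) / len(gold))
    return sum(per_query) / len(per_query) if per_query else 0.0


# Illustrative labels only.
retrieved = {
    "refund window": ["doc3", "doc7", "doc1"],
    "office hours": ["doc9", "doc2", "doc4"],
}
relevant = {
    "refund window": {"doc3"},
    "office hours": {"doc4", "doc5"},
}
print(recall_at_k(retrieved, relevant, k=3))  # → 0.75
```

A low score here suggests that improving the retriever or the corpus is likely to pay off before any tuning of the generation model.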

The Future of RAG: Expanding AI’s Knowledge Boundaries

As Retrieval-Augmented Generation models become more refined, they will continue to bridge the gap between static model knowledge and dynamic, real-world data. By offering accuracy, adaptability, and efficiency, RAG is poised to be a vital tool in fields ranging from customer support to scientific research, where relevance and precision are indispensable. While there are challenges to address, the RAG approach’s ability to produce richer, more context-aware responses makes it a powerful enhancement to language generation models.

Ultimately, RAG represents a key step in the evolution of AI, enabling systems to move beyond pre-trained data and interact meaningfully with the world’s ever-growing body of information.